I was able to run Llama 3.1, released on Hugging Face two days ago, on my own machine.

In my experience, Llama 3.1 works at least as well as ChatGPT-4o, and hosting it on your own server costs only your home electricity.

On a Windows computer, download LM Studio from https://lmstudio.ai.

In LM Studio, search for Llama 3.1 and download whichever version fits in your GPU's memory (I chose a 16 GB version).
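To judge which download fits, a rough rule of thumb is that the weights alone take about parameters × bits-per-weight ÷ 8 bytes, so the 8B model is roughly 16 GB at 16-bit and 4 GB at 4-bit. This sketch just does that arithmetic. The 1.5 GB `overhead_gb` allowance for the context cache and runtime is my own hypothetical figure, not something LM Studio reports.

```python
def estimate_weight_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights alone, in GB (1 GB = 1e9 bytes)."""
    # params_billion * 1e9 weights * (bits / 8) bytes each / 1e9 bytes per GB
    return params_billion * bits_per_weight / 8


def fits_in_vram(params_billion: float, bits_per_weight: float,
                 vram_gb: float, overhead_gb: float = 1.5) -> bool:
    """True if the weights plus a fixed allowance fit in GPU memory.

    overhead_gb is a hypothetical allowance for the context (KV) cache and
    runtime buffers; real usage grows with the context length you load.
    """
    return estimate_weight_gb(params_billion, bits_per_weight) + overhead_gb <= vram_gb
```

For example, `fits_in_vram(8, 4, vram_gb=8)` is `True`, while `fits_in_vram(70, 4, vram_gb=24)` is `False`. Treat the result as a first filter; the file sizes LM Studio lists for each download are the better guide.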

Load the model, then start LM Studio's local server from the Server tab.
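Once the server is running, anything that speaks the OpenAI chat API can talk to it, not just VS Code. Here's a minimal Python sketch using only the standard library. It assumes LM Studio's default port 1234 and its OpenAI-compatible `/v1/chat/completions` endpoint; the model name `llama-3.1-8b-instruct` is a placeholder, so use the identifier LM Studio shows for the model you actually loaded.

```python
import json
import urllib.request

# Assumption: LM Studio's server is on its default port 1234 on this machine.
DEFAULT_BASE_URL = "http://localhost:1234/v1"


def build_chat_request(prompt, model="llama-3.1-8b-instruct",
                       base_url=DEFAULT_BASE_URL, temperature=0.2):
    """Build (but do not send) an OpenAI-style chat completion request.

    The default model name is a placeholder, not a guaranteed identifier.
    """
    payload = {
        "model": model,
        "messages": [
            {"role": "system", "content": "You are a helpful coding assistant."},
            {"role": "user", "content": prompt},
        ],
        "temperature": temperature,
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt, **kwargs):
    """Send one prompt to the local server and return the reply text."""
    request = build_chat_request(prompt, **kwargs)
    with urllib.request.urlopen(request, timeout=120) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

With the server running, `print(ask("Write a Python function that reverses a string."))` should print the model's answer. Keeping `build_chat_request` separate from `ask` lets you inspect the request without a server.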

Open cmd.exe and run `ipconfig` to find your local IP address (listed as "IPv4 Address").

Download VS Code and install the ‘Reborn AI’ extension.
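For the `ipconfig` step, if you'd rather not hunt through its output, this small Python sketch asks the operating system which IPv4 address it would use for outbound traffic. Connecting a UDP socket sends no packets, and `8.8.8.8` is only an arbitrary routing target. With a VPN active you may get the VPN's address instead of your LAN one, so cross-check against `ipconfig`.

```python
import ipaddress
import socket


def local_ipv4(probe_host="8.8.8.8", probe_port=80):
    """Return the IPv4 address the OS would use to reach probe_host.

    connect() on a UDP socket only selects a route; getsockname() then
    reports the local source address. Falls back to loopback if there is
    no route (for example, when offline).
    """
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        try:
            sock.connect((probe_host, probe_port))
            return sock.getsockname()[0]
        except OSError:
            return "127.0.0.1"


if __name__ == "__main__":
    ip = local_ipv4()
    kind = "private/LAN" if ipaddress.ip_address(ip).is_private else "public"
    print(f"{ip} ({kind})")
```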

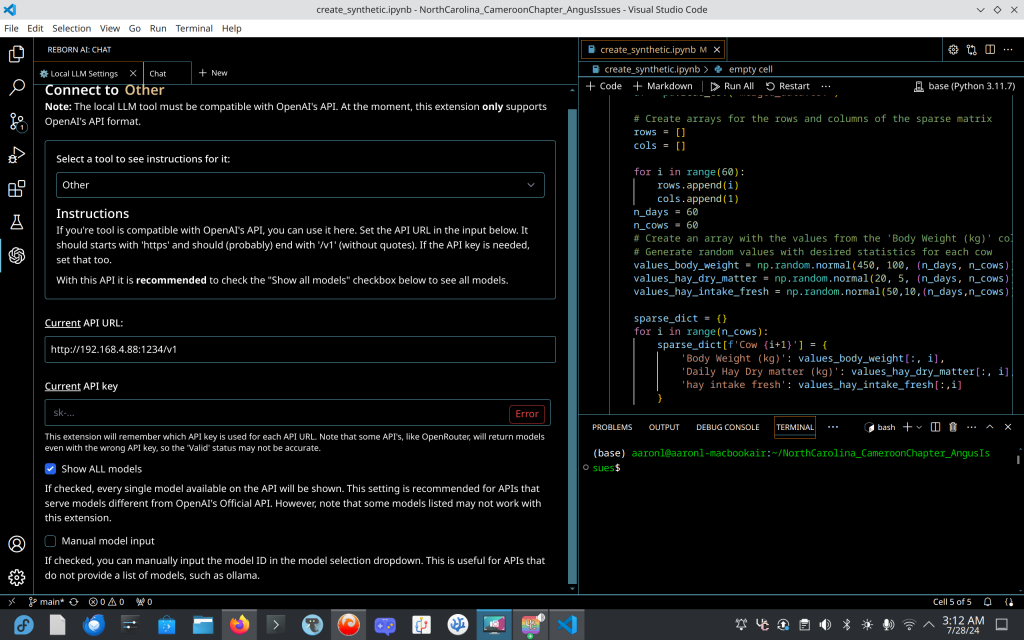

In Reborn AI's settings, choose the ‘Other’ category (for other and local LLMs) and enter your local IP address as the server address.
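The address the extension needs is essentially LM Studio's base URL. This sketch assumes the default port 1234 and the OpenAI-style `/v1/models` endpoint, and the function names are my own. It turns the IP from `ipconfig` into that URL and checks that the server answers before you paste it into Reborn AI.

```python
import ipaddress
import json
import urllib.request


def lm_studio_base_url(ip, port=1234):
    """Turn the IPv4 address from ipconfig into an OpenAI-style base URL.

    Port 1234 is LM Studio's default; change it if you configured another.
    """
    ipaddress.IPv4Address(ip)  # raises ValueError on a typo like 192.168.1.300
    return f"http://{ip}:{port}/v1"


def server_models(base_url, timeout=3.0):
    """Return the model ids from GET /v1/models, or None if unreachable."""
    try:
        with urllib.request.urlopen(f"{base_url}/models", timeout=timeout) as response:
            body = json.load(response)
    except (OSError, ValueError):  # URLError and timeouts are OSError subclasses
        return None
    return [model["id"] for model in body.get("data", [])]
```

For example, `server_models(lm_studio_base_url("192.168.1.20"))` returns a list of loaded model ids, or `None` if nothing answered. If you connect from another machine, check that LM Studio's server accepts network connections and that Windows Firewall isn't blocking the port.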

Reborn AI lets you cut and paste code blocks and add your own comments or instructions when sending code to Llama 3.1.

Here is an example:

There are three response modes: full, concise, and code only. You can also select a code block and send it into the prompt. It’s really useful, and Meta's Llama 3.1 has a 128K-token context window, much larger than many other open models.
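To get a feel for whether a file will fit in that context window, here's a crude Python check. It uses the common rule of thumb of about 4 characters per token rather than Llama 3.1's real tokenizer, and `reserve_for_reply` is a hypothetical margin for the model's answer. LM Studio also lets you set the context length when loading a model, and that setting may be lower than the 131,072-token maximum.

```python
import math

# Llama 3.1's maximum context length: 128K tokens.
LLAMA_31_CONTEXT_TOKENS = 131_072


def rough_token_count(text, chars_per_token=4.0):
    """Crude estimate using ~4 characters per token, NOT the real Llama tokenizer."""
    return math.ceil(len(text) / chars_per_token)


def fits_in_context(text, reserve_for_reply=4096,
                    context_tokens=LLAMA_31_CONTEXT_TOKENS):
    """True if the estimated prompt plus a reply margin stays within the window.

    reserve_for_reply is a hypothetical margin, not an LM Studio setting.
    """
    return rough_token_count(text) + reserve_for_reply <= context_tokens
```

Pass it the contents of the file you plan to paste in; if you're near the limit, the real token count could land on either side, so leave some slack.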